Sharing my conference notes and thoughts from a UX and behavioral science perspective after attending the inaugural Generative AI Summit at MIT on March 3, 2023. If you’re exploring similar areas or interested in collaborating on a generative AI project around tools for thought and co-creation, let’s connect.

1. Explainability and Trust

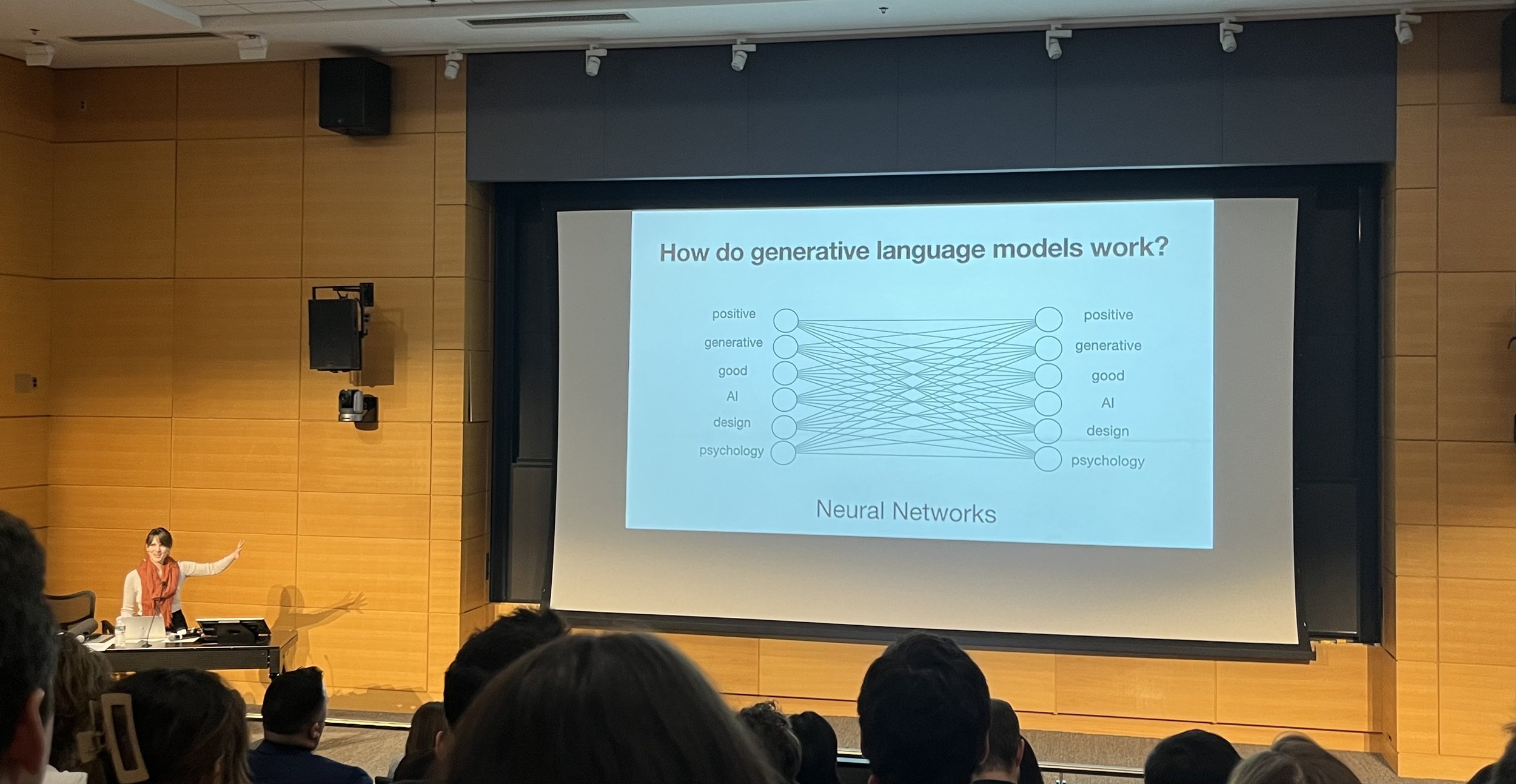

The explainability of an AI system is inherently tied to user trust. As outlined in the People + AI Guidebook, calibrating trust requires setting proper expectations so that users understand what the AI system can and cannot do. People learn faster when they can see the system's response to their actions right away. It's therefore important to make the cause-and-effect relationship between user actions and system responses visible, which speeds up people's reasoning about, and learning of, the machine's "thought process." As AI increasingly helps with important tasks in everyday life, how do we handle the growing complexity of AI systems when we may not understand why they work so well?

In Sapiens: A Brief History of Humankind, Yuval Noah Harari argues that humans' unique ability to create and spread fictions, which gives rise to shared beliefs (such as religions) and collective imagination, is what makes large-scale human cooperation possible. Ultimately, the ability to quickly establish trust with many strangers fuels human productivity, institutional stability, and industrialization. As advances in LLMs (large language models) drive the cost of producing seemingly correct information toward zero, how do we scale trust?

Ellie Pavlick, Google Research

Keynote by Eric Schmidt, former CEO of Google

2. User Feedback and Control

AI systems are probabilistic, which means they can sometimes produce incorrect or unexpected output. It is therefore critical to build the right mechanisms for gathering user feedback and giving users control. These mechanisms help improve the model's output and keep the user experience personalized, valuable, and trustworthy.

When errors occur, we need to help users understand them and provide controls and alternative paths forward. This means new mental models will emerge for how humans interact with machines, requiring new levers and interaction patterns, as both Linus Lee and Catherine Havasi emphasized during the summit. I am curious to explore new human-centered, participatory design research methods to better understand the future relationship between human input and machine output.

Today, a significant portion of UX research and behavioral science involves studying, modeling, and framing the specific contexts of a problem space, so we can better reason about which design direction or intervention is likely to be more effective in solving user problems. As explained in the essay The Science of Context, the real skill here is “recognizing and articulating context with enough clarity that it illuminates how small changes (e.g., nudges) will affect behavior.” As we explore new levers and interaction patterns for AI product design, behavioral science could play a bigger role in the product development process.

Panel: The Future of Creation (Linus Lee, Nicole Fitzgerald, and Russell Palmer)

3. Augmenting Creativity

While some may question whether human creativity will decline with advances in generative AI, I really like how Linus Lee raised the question of whether humanity has gotten better at making music over the past few centuries. Since the key to expanding creative expression is an augmented ability to say the things we want to say, I see generative AI as a new tool and lens that can introduce inspiring perspectives during the creation process, much like how cameras enabled new multi-modal art forms (e.g., photography and film) beginning in the nineteenth century.

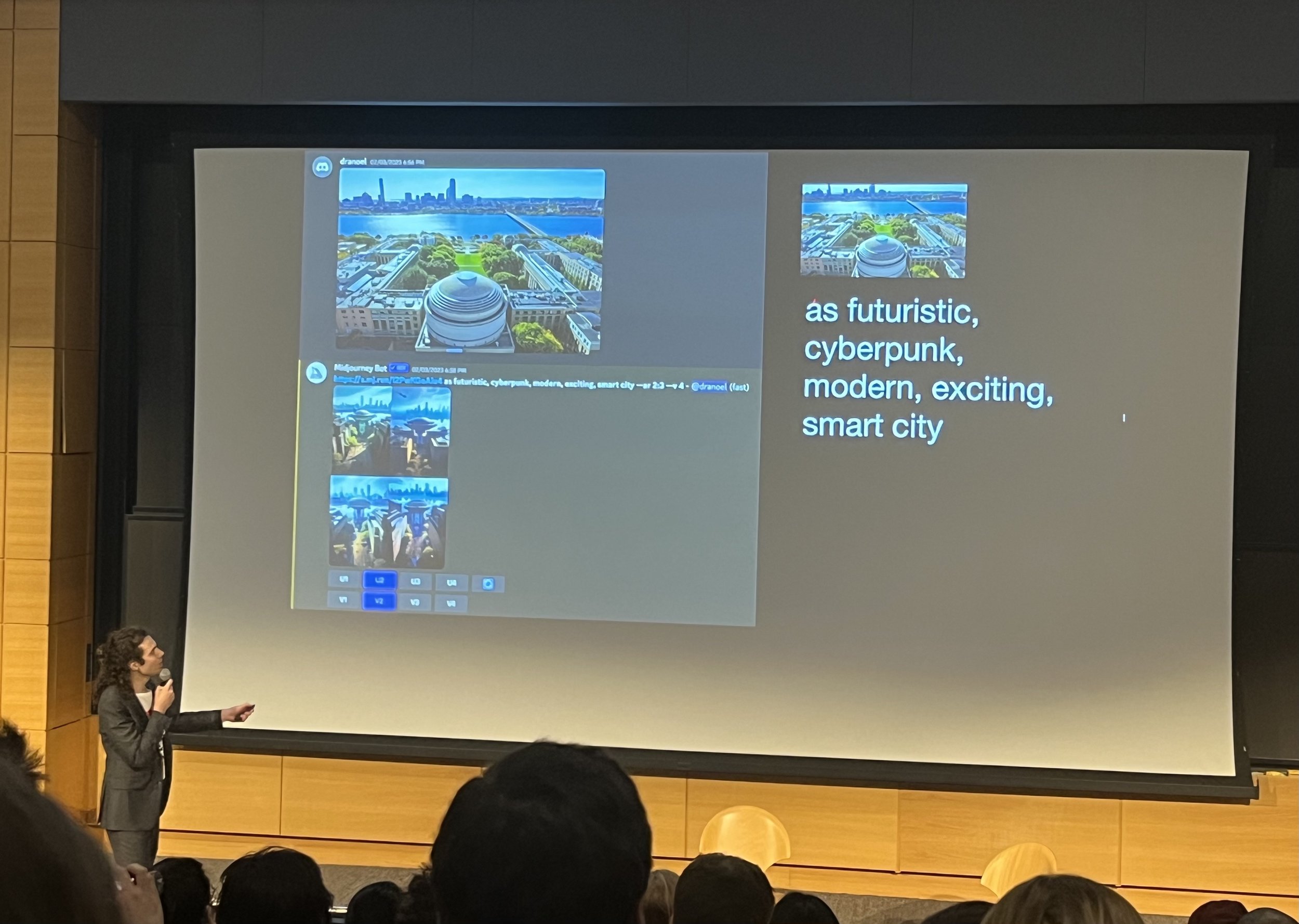

In a discussion on creativity that I helped moderate with a group of friends last summer, one key theme that emerged was the importance of cross-pollinating ideas, which LLMs can facilitate by introducing serendipity and randomness early in the creation process. In other words, LLMs can help humans quickly explore the unknown. We know that creativity can be fostered through conscious actions; this is at the core of human-centered design, which emphasizes the process of divergent and convergent thinking. In the future, the creative process may place greater emphasis on chance, selection, and iteration, with AI augmenting human decision-making at each step of the human-machine feedback loop.

To interact effectively with generative AI models, we also need to augment our ability to communicate accurately what we want and to develop clarity of thought, so we can contribute more capably to the co-creative process of prompt engineering. For example, how might we better describe voice and texture? People need to get better at communicating exactly what they want, whether it is a high-level concept or the specific way they want audio or images to be produced, when prompting generative models.

4. Emergent Value Systems

With generative AI models now capable of quickly producing vast amounts of open-ended, creative, and multi-modal content, our tastes, values, and evaluation criteria as a society will evolve too. As Nicole Fitzgerald emphasized during the summit, the ability to curate and fine-tune this content with taste will become critical. Education is already one of the first fields whose evaluation systems are having to respond to this shift.

I'm optimistic that social curation and co-creation with collaborators, including both humans and machines, will play a big role in future information discovery processes. Content curation from trusted networks will be key in helping people discover, filter, and digest useful information.

During his keynote speech, Eric Schmidt emphasized the need for new thinkers and interpreters with multidisciplinary backgrounds in both science and art (e.g. math and philosophy) to help people understand the implications of the new AI-powered reality for society. I strongly believe in the value of multidisciplinary conversations, and this reminds me of Robert Pirsig's argument in his book Zen and the Art of Motorcycle Maintenance. Pirsig distinguishes between two kinds of understanding of the world: classical and romantic. Classical understanding sees the world primarily in terms of the underlying form itself, with the goal of making the unknown known and bringing order out of chaos. Romantic understanding, on the other hand, sees the world in terms of immediate appearance, and is primarily inspirational, imaginative, creative, and intuitive.

Pirsig argues that these two modes remain unreconciled. In the case of motorcycle maintenance, for example, motorcycle riding is romantic, while motorcycle maintenance is purely classical. The problem is that people tend to think and feel exclusively in one mode or the other, which leads them to misunderstand and underestimate what the other mode is all about. In Pirsig's view, a real reunification of art and technology is long overdue.

Léonard Boussioux, MIT Operations Research PhD

Thanks for reading! If you have any comments or feedback on this blog post or are interested in collaborating on a generative AI project, I would love to connect. Please feel free to email me.